Hi, I’m Tom, DownPat’s Chief AI officer, and this is my first post! In addition to the normal/generic duties of a data scientist, my role is to evaluate how well the AI tools we develop and use are working. As a brief reminder, DownPat works with self-improvement experts to build conversational practice exercises based on their teachings, which include feedback from an AI Coach on how well the User does.

One important aspect of building practice exercises with our experts is evaluating if the exercise’s Large Language Model (LLM) is working well enough. This can be done manually by the expert and our team playing through the exercise, but as experts create dozens (and eventually, hundreds) of exercises, this doesn’t scale well with our limited time. In addition to testing if newly created exercises are ready, there is also a need to assess if exercises can work well enough with smaller/cheaper models (ie do we need to run an exercise using GPT-4o, or does it meet a high enough performance score on GPT-4o-mini to use that much cheaper model instead?).

Before considering potential automated solutions, it is important to note that this evaluation problem is different (and more difficult) than the typical evaluation benchmarks used in the industry. An LLM’s open-ended response quality can be judged by systems like Chatbot Arena, MTBench, MixEval, etc but since DownPat is building an LLM-application, we need a set of custom, application-specific evaluations (often colloquially shortened to “Evals” by the way). Since conversation and feedback quality is nebulous to measure (i.e. no single “right” answer), I decided that the “right” approach for us would be to use a LLM-as-a-judge strategy where an evaluator LLM would provide numerical performance scores. With this strategy we can independently evaluate exercises and models. This is in contrast to pairwise response comparison evaluation between two different responses to select the better response; since our problem is determining if a model or response is “good enough” rather than relative rankings, I decided not to pursue the pairwise comparison approach.

I began looking into options for LLM-as-a-Judge with numerical grading by researching some companies and groups that work on application evaluation packages, such as Weave, Parea, and Prometheus. Weave is part of the widely used Weights & Biases platform and has a wide array of functionality on tracing to help identify an issue’s root cause and custom evaluation scoring. Parea similarly supports tracing and has implemented many evaluation methods from recent papers (for example, Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena). Prometheus is a research group focused on prompting methods, fine tuning models, and benchmarks for fine-grained evaluation scoring.

Ultimately, I determined that none of these options were quite what we needed (though Weave might have been flexible enough to support it; I will revisit one day), and in particular I could not find any packages or methods specific to open-ended chatbot dialogue analysis (please let me know if I missed one!). I decided to spend a few weeks building a custom solution myself, especially since this would give me total control and flexibility (in terms of models, grading scale, prompt engineering, etc). The bulk of the work for that was actually building out functions and pipelines to pull conversation data from our Firestore database and transform it into the formats needed for inclusion into the evaluation prompt (which we would have had to do anyway no matter which package/method we chose).

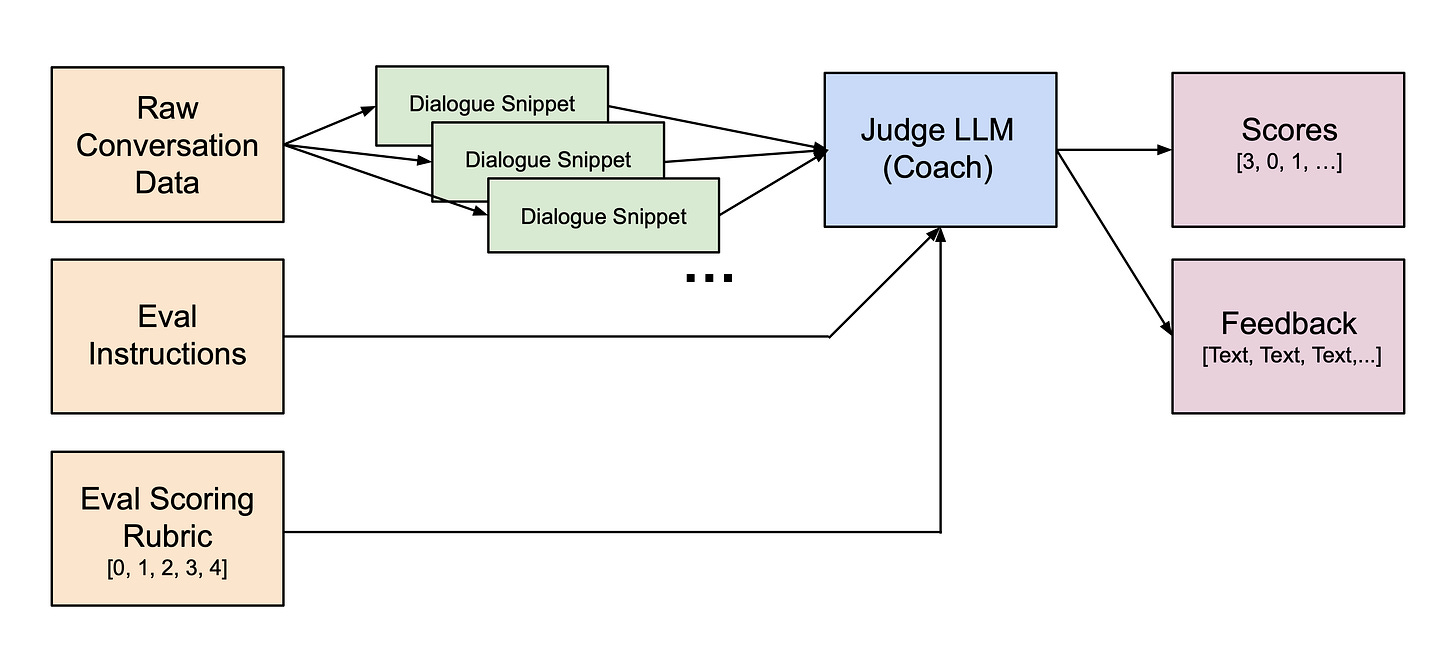

Based on my reading of papers with numerical scoring (S Kim et al 2023, TS Kim et al 2023, Gao et al 2023, Huang et al 2024, Liu et al 2023), I decided to go with a method where a Judge LLM is provided the conversation responses or feedback to analyze, a set of instructions specific to the exercise, and a integer scoring rubric that goes from 0 to a user-selected positive integer (basically a type of Likert scale). In addition to the rubric, one has to provide the instructions and a portion of the exercise conversation to evaluate in the prompt. The Judge LLM then outputs a score and written feedback for each piece of dialogue it’s given.

I’ll next describe some example evaluations I used to test the process. I created a simple manager-employee conversation exercise based on management principles I read from blog posts and remember from my time as a manager at Uber. I use Open AI’s GPT-4o as the Judge LLM since it now has structured output to provide a separate feedback text and numerical score. These conversations involve a Manager (the User), an Employee (an LLM), and a Coach (an LLM). A typical dialogue snippet looks something like:

Employee: "I feel that my work and contributions aren't being taken seriously."

User: "Ah, that sounds frustrating. Tell me more about what's going on?"

Coach: "This is a good start as you're actively listening and inviting the employee to share more about their experience. It's important to let them express themselves fully so you can understand the root cause of their frustration."

To evaluate the Coach’s feedback to the User, we can use a 0-4 scoring rubric:

Criteria: "Does the coach give useful feedback for the manager, specifically referencing parts of the conversation between the user and the employee and reference key concepts?"

Score 0 Description: "The coach's response doesn't provide feedback to the manager or is irrelevant to the manager and employee conversation or asks questions."

Score 1 Description: "The coach's response is relevant to the manager and employee conversation, but is only one or two sentences long or doesn't provide feedback on what the manager said."

Score 2 Description: "The coach's response provides feedback to the manager, is relevant to the manager and employee conversation and is several sentences long, but lacks details or doesn't refer to specific details that were said."

Score 3 Description: "The coach's response provides feedback to the manager, is relevant to the manager and employee conversation and is several sentences long and provides some details and specific references."

Score 4 Description: "The coach's response provides feedback to the manager, is relevant to the manager and employee conversation and is several sentences long and provides excellent, in depth detail and excellent, specific details."

The feedback from the Judge on the Coach is “The coach's response is relevant to the manager and employee conversation and provides feedback on what the manager said. The feedback is several sentences long and includes some details and specific references, such as acknowledging the manager's active listening and the importance of letting the employee express themselves fully. However, the feedback lacks in-depth detail and does not provide excellent, specific details about how the manager can further improve their response or handle the situation” and gives the response a score of 3 (which seems about right).

One issue that we see when using a poorly written prompt or too small an LLM is that the coach or agent will just repeat back what the user said. Here’s an example conversation where I purposely wrote a bad prompt for the coach:

Employee: "I feel that my work and contributions aren't being taken seriously."

User: "Ah, that sounds frustrating. Tell me more about what's going on?"

Coach: "Ah, that sounds frustrating. Tell me more about what's going on?”

The feedback from the Judge on the Coach is "The coach's response is a verbatim repetition of the manager's response to the employee. It does not provide any feedback to the manager, nor does it reference any specific parts of the conversation between the manager and the employee. The response is irrelevant to the task of coaching the manager on how to handle the employee's concerns” and (correctly) gives the response a score of 0 based on the rubric. We can then catch these low score outputs to determine when exercise prompts have not been written well enough for the LLM to play their role. This specific example could of course be caught by a simpler/cheaper string similarity check (since it’s a verbatim repetition), but this approach should be enough to get started on catching some more subtle failure modes that we have seen.

So what’s next? Well, it’s time to start applying these Evals to exercises being built by experts on our platform, iterate on improving the prompts and data pipelines, and add more scores and rubric types. I also plan to give Weave another look and try, especially since the ability to track traces and prompt version control via the Weights & Biases platform may become important for us in the future. And please drop me a line if you’re aware of models and prompting methods for advanced dialogue analysis that go well beyond summarization!